First there was a face

Throughout human history, access control has rested on three fundamental factors: “something you know, something you have, or something you are”. People moved from relying primarily on their appearance (something they are) to hundreds, even thousands, of years dominated by physical keys (something they have), while passwords and secret words were used only occasionally.

Modern society quickly adopts new ways of protecting property and data. With computers of all sorts automating many aspects of our lives, it took less than 40 years for passwords, PINs and even our own biometric data to become commonplace for many people around the world. What hasn’t changed are the major usage patterns and flaws of all three factors:

- We have to memorize passwords, secret words and PINs. Simple passwords can be “cracked”; complex ones are easy to forget.

- Keys must be with us. Like any physical thing, they can be stolen, lost or forged.

- Physical appearance was the first factor humanity used, and we still rely on it every day with our family, friends and coworkers. Our fingers, faces and eyes are always with us. They are relatively hard to fake and virtually impossible to steal. However, we only recently started using this factor for automated access control: it took a while before machines learned to confidently recognize fingerprints, irises and faces. There is still a long way to go, but we can certainly say that the factor of what we are is in many ways the present, and definitely the future, of access control.

Fingerprint, iris and face recognition are the most developed biometric authentication techniques. Weighing robustness, performance, anti-spoofing, convenience and privacy against each other, the industry sees facial identification, with its latest advancements, as a good balance among all these factors.

Facial Recognition is one of the most developed, widely used and controversial areas of Machine Learning. There is still an ongoing debate about whether society should adopt it widely. At the same time, most of us already use different kinds of facial algorithms every day in consumer-grade cameras, smartphones and PCs, ranging from simple face tracking to advanced identification with technologies like Apple Face ID and Microsoft Windows Hello.

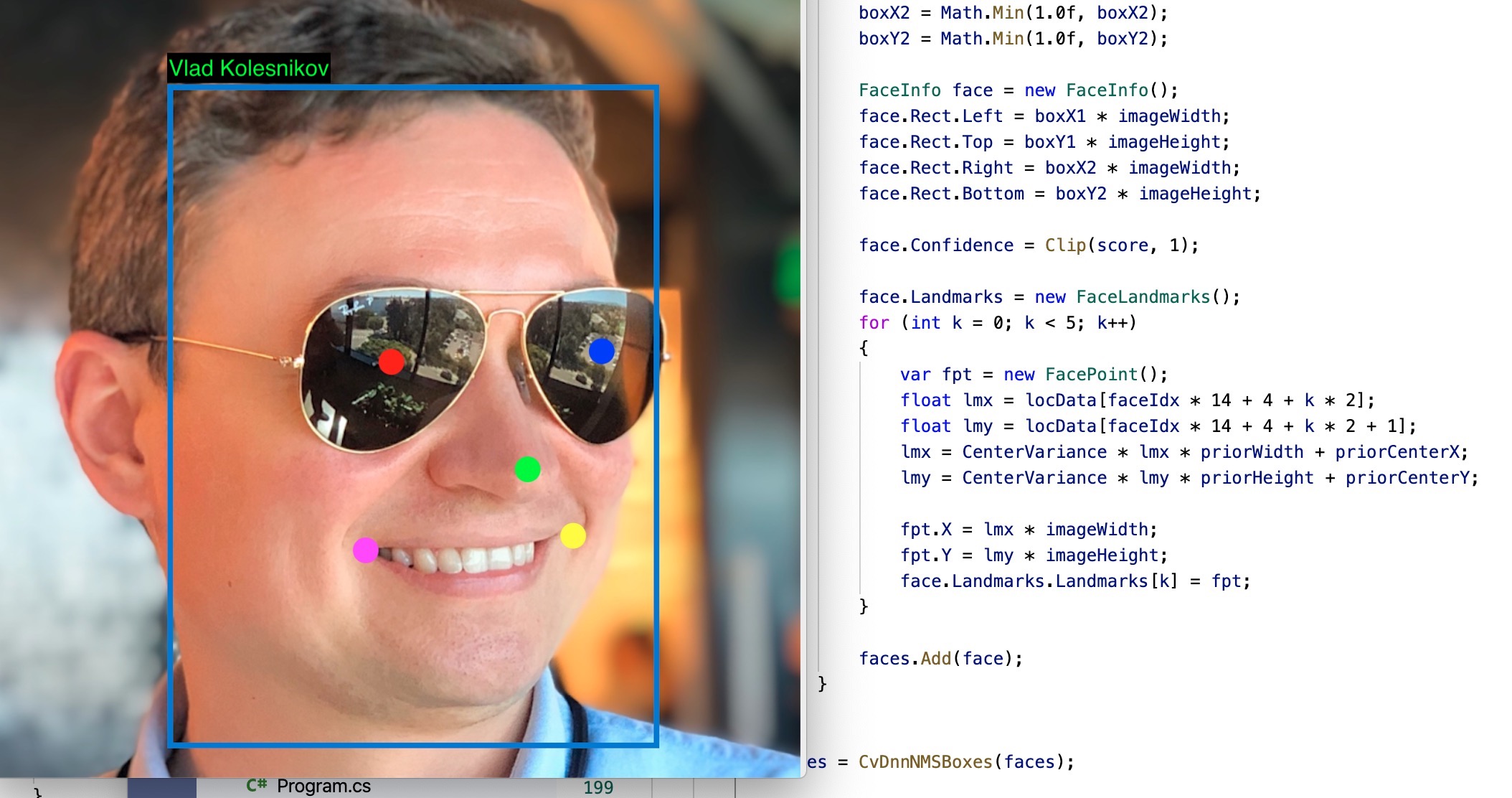

My name is Vlad Kolesnikov. I work at Microsoft, in the Commercial Software Engineering team.

This post starts a series of short stories describing my journey of developing a “weekend project”: a Facial Recognition Access Control service.

I simply want to be able to unlock doors, apps and even web sites using my face. And I want to take it from a single-app demo to a service that can handle organizations with thousands of people to identify and doors to control.

My focus here is on building a toolset around a variety of existing computer vision techniques, media frameworks, ML models and cloud services, so that all these instruments can play together as one orchestra.

Computer Vision in production

If it sounds interesting, let’s walk along this path together and learn what it takes to build a biometric access control service, from getting video frames to identifying a person, at a scale ranging from a single family to thousands of people and devices. This scalability part is very important.

Nearly everything we build in Commercial Software Engineering with our customers gets deployed and used at scale in production. Of course, there will always be proofs of concept and demos, simple (or not that simple) services and apps made for upskilling while learning a new technology. However, there is a huge difference between solving a single Computer Vision problem and running an end-to-end solution, especially when we are talking about a hybrid system with device and cloud components. That’s what I will be primarily working on: integrating a variety of components across computer vision, media capture, device management, cloud-based AI, API management, storage, and security.

This series is still just a peek at what it takes to run a production-ready biometric security solution. Before you turn it into a real production solution, there are many more problems to solve in device management, anti-spoofing, resiliency, DevOps, security and privacy.

It is solely a personal project, based on my personal learnings and experiments, with no guarantee or intent to be used for protecting anything that is important to you. I will not be liable for damages or losses arising from your use or inability to use any code or practices described here or on linked resources.

Hardware and software

To give everyone some context, we are going to use the following components:

Using a web camera for development is perfectly fine. Later on I will switch to an Amcrest IP camera for video capture and streaming via RTSP. A USB webcam would be good too, but I’m really curious how it works with a real security camera. This one is close to commercial-grade cameras, costs less than $100 and supports Wi-Fi connectivity (there is a Power-over-Ethernet version as well).

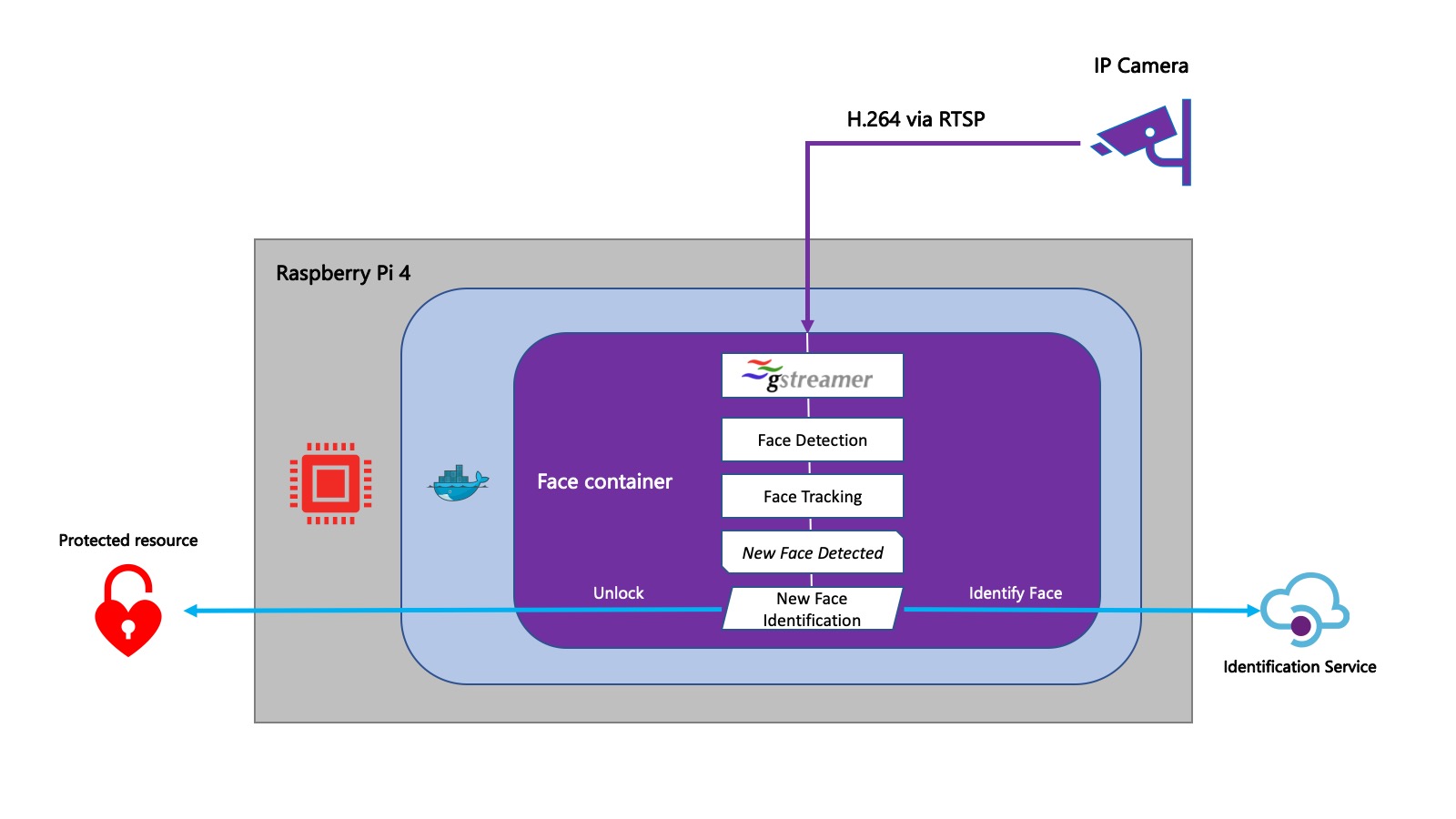

Raspberry Pi 4 is our edge device, performing all computational tasks from video decoding up to sending face images for identification.

Raspberry Pi 4 is powered by a quad-core ARM Cortex-A72 (ARM64) CPU. Another example is NVIDIA Jetson Nano. Both are very popular for IoT development and prototyping: they are compact, reasonably priced and offer a lot of processing power at the same time. However, they cover only one of three equally plausible scenarios. Two more cases to think about:

- The device is connected directly to the camera, doesn’t have to decode video, and can dedicate all its power to image processing.

- A single powerful co-located machine processes video streams from multiple cameras. It is usually equipped with a good GPU and capable of decoding multiple video streams simultaneously.

In all cases we are talking about an IoT deployment model. By ‘IoT’, I mean managing devices through a centralized, remote (cloud) infrastructure. I work at Microsoft, so here we’ll be using…

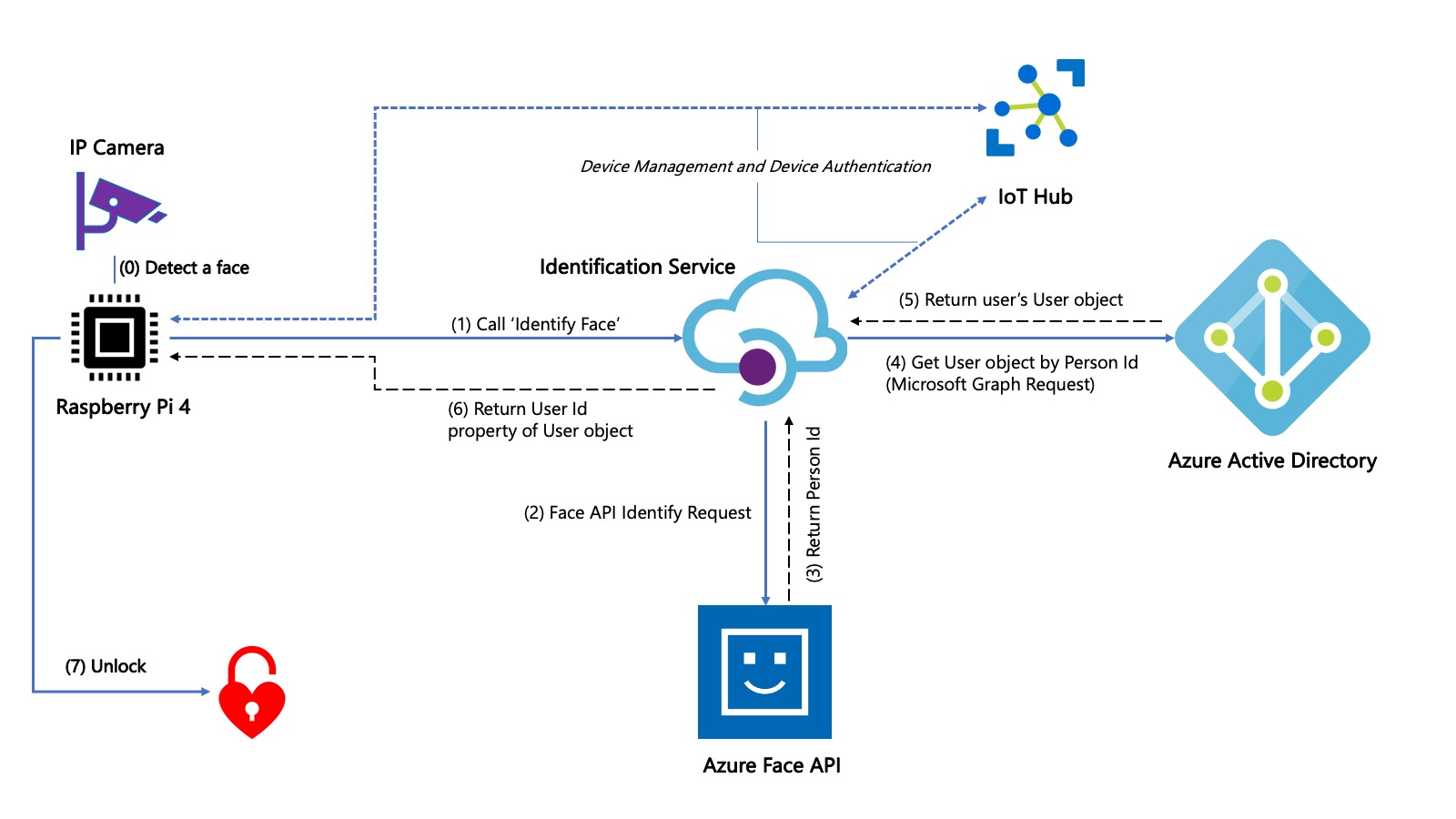

- Azure IoT Hub as a central authentication and message hub for bidirectional communication between our service and the devices it works with. IoT Hub will help us authenticate devices and maintain device-side software components.

- GStreamer for video capture and decoding. Real deployments don’t always have the luxury of working with USB-connected cameras (there are also things like Camera Link). More often they stream video from a network of CCTV-grade devices. We need a tool that can handle both scenarios. GStreamer is a proven open-source multimedia framework supported by major industry players and the community.

- OpenCV for performing basic image processing and, most importantly, running DNN inference for Face Tracking.

- Azure Cognitive Services Face API for facial identification. You can try it right now, in your browser. There are plenty of samples you can look at and use as a starting point for your app.

- Azure Active Directory for providing user identity and authentication services. It will help connect abstract “Person IDs” in Face API to real identities managed by Active Directory.
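To make the “handle both scenarios” point concrete: with GStreamer, a USB webcam and an RTSP camera differ only in the source end of the pipeline. The sketch below is illustrative, not the code from this series. `build_capture_pipeline` and `open_capture` are hypothetical helpers, and it assumes the IP camera streams H.264 over RTSP and that OpenCV was built with GStreamer support. `avdec_h264` decodes in software; a hardware decoder element could be swapped in where the device offers one.

```python
def build_capture_pipeline(source: str, latency_ms: int = 200) -> str:
    """Hypothetical helper: build a GStreamer pipeline string that ends in an appsink delivering BGR frames."""
    if source.startswith("rtsp://"):
        # Network camera: receive RTP over RTSP, depacketize and decode H.264 (assumes an H.264 stream).
        head = (f'rtspsrc location="{source}" latency={latency_ms} ! '
                "rtph264depay ! h264parse ! avdec_h264")
    else:
        # Local camera: treat the source as a Video4Linux2 device path such as /dev/video0.
        head = f"v4l2src device={source}"
    # Convert to BGR (OpenCV's native layout) and keep only the newest frame in the sink.
    return head + " ! videoconvert ! video/x-raw,format=BGR ! appsink drop=true max-buffers=1"


def open_capture(source: str):
    """Open the pipeline with OpenCV; requires an OpenCV build with GStreamer enabled."""
    import cv2  # imported lazily so the pipeline builder works without OpenCV installed
    cap = cv2.VideoCapture(build_capture_pipeline(source), cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        raise RuntimeError(f"could not open GStreamer pipeline for {source}")
    return cap
```

Frames would then be read in a loop with `ok, frame = cap.read()`, where `cap = open_capture("/dev/video0")` for a USB webcam or `open_capture("rtsp://…")` for the IP camera. The `drop=true max-buffers=1` settings make the sink discard stale frames, so slow processing does not pile up latency.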

Why Azure Face API

Basic computer vision tasks such as face detection and motion tracking are certainly doable today on fairly low-power hardware, with performance and confidence sufficient for most reasonably scoped deployments.

Out of the entire Facial Recognition space, identification is the most complicated, resource-intensive and controversial part. When you have just a few people to identify, training a publicly available model is perfectly fine. Moving from a group of 10 people you personally know to thousands of people makes it much harder, due to at least the following factors:

- In order to train a base model with low False Acceptance and False Rejection Rates (FAR and FRR), you need a training set beyond those publicly available today. Somehow it’s not a well-known fact, but the vast majority of facial training datasets today may be used only for research and in non-commercial applications.

The National Institute of Standards and Technology (NIST) runs a series of tests of Facial Recognition algorithms from different vendors. Recent NIST research demonstrated that some facial recognition technologies have higher error rates for certain demographic groups. As documented in the “Gender Shades” research, this problem arises when trying to determine the gender of women and people of color. Microsoft invests heavily in Face API training datasets and model quality.

- Machine Learning, and especially cloud-based biometrics, raises a lot of concerns about privacy, security, fairness and transparency. Microsoft developed its Responsible AI Principles: our commitment to the advancement of AI driven by ethical principles that put people first.

Microsoft is operationalizing responsible AI at scale, across the company. This is accomplished through Microsoft’s AI, Ethics, and Effects in Engineering and Research (Aether) Committee and our Office of Responsible AI. Aether is tasked with advising Microsoft’s leadership on emerging questions, challenges, and opportunities brought forth in the development and fielding of AI innovations. The Office of Responsible AI implements our cross-company governance, enablement, and public policy work. Together, Aether and the Office of Responsible AI work closely with our engineering and sales teams to help them uphold Microsoft’s AI principles in their day-to-day work. An important hallmark of our approach to responsible AI is having this ecosystem operationalize responsible AI across the company, rather than relying on a single organization or individual to lead this work. Our approach to responsible AI also leverages our process of building privacy and security into all of our products and services from the start.

- Lastly, you need a powerful machine to run a robust facial recognition model, so you end up hosting and managing servers just for facial identification. That is a valid scenario: there is even a Face API container that provides the same REST API.
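FAR and FRR pull against each other through the match threshold. FAR is the share of impostor attempts that get accepted, and FRR is the share of genuine attempts that get rejected. Below is a minimal sketch, assuming the matcher outputs a similarity score and accepts a match when the score is at or above a threshold. `far_frr` is a hypothetical helper, and the scores in the usage note are made up purely for illustration.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) for a threshold; a comparison is accepted when score >= threshold."""
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    # Impostors whose score clears the threshold are let in: false acceptances.
    false_accepts = sum(1 for s in impostor if s >= threshold)
    # Legitimate users whose score falls short are turned away: false rejections.
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)
```

With illustrative genuine scores `[0.91, 0.85, 0.78, 0.60]` and impostor scores `[0.20, 0.35, 0.55, 0.72]`, a threshold of 0.7 gives FAR 0.25 and FRR 0.25, while 0.8 gives FAR 0.0 and FRR 0.5. Raising the threshold trades false acceptances for false rejections, which is why a better model, not just a stricter threshold, is needed to bring both down.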

A note on OpenCV

Adrian Rosebrock and Satya Mallick run two priceless sources of knowledge, PyImageSearch and LearnOpenCV, where they give you practical examples of solving Computer Vision problems using OpenCV and Deep Learning. If you are just getting into Computer Vision, I highly recommend these resources.

OpenCV is a great library that has democratized Computer Vision, accumulating a wealth of algorithms through continuous contributions from the research and development community. It’s written in C++, leverages hardware acceleration, has been ported to all major platforms and has bindings for many programming languages.

Dlib is definitely worth knowing about as well. It’s not as popular as OpenCV, but it has its advantages, including its implementations of facial recognition algorithms.

While there are more specialized frameworks, especially when it comes to running DNN inference, OpenCV is a robust, integrated library with features and performance that will help us at many important steps: from right after video decoding up to the moment we send a face picture to Azure Face API for identification.
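As an example of the kind of step OpenCV covers between decoding and identification, here is a sketch of face detection with OpenCV’s DNN module. It assumes the ResNet-10 SSD face detector from OpenCV’s samples (a Caffe model with 300x300 input whose output rows are `[image_id, class_id, confidence, x1, y1, x2, y2]` in normalized coordinates). That choice, and the helper names `parse_detections` and `detect_faces`, are assumptions for illustration, not necessarily what this series ends up using.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def parse_detections(detections, width: int, height: int,
                     min_confidence: float = 0.5) -> List[Tuple[float, Box]]:
    """Convert an SSD output blob of shape (1, 1, N, 7) into (confidence, pixel box) pairs."""
    faces = []
    for row in detections[0][0]:
        confidence = float(row[2])
        if confidence < min_confidence:
            continue
        # Scale normalized corners to pixels and clamp them to the frame.
        x1, y1 = max(0, int(row[3] * width)), max(0, int(row[4] * height))
        x2, y2 = min(width, int(row[5] * width)), min(height, int(row[6] * height))
        if x2 > x1 and y2 > y1:  # skip degenerate boxes
            faces.append((confidence, (x1, y1, x2, y2)))
    return faces


def detect_faces(net, frame, min_confidence: float = 0.5) -> List[Tuple[float, Box]]:
    """Run an OpenCV DNN SSD face detector on one BGR frame."""
    import cv2  # lazy import: parse_detections itself has no OpenCV dependency
    height, width = frame.shape[:2]
    # 300x300 input and per-channel BGR means used by OpenCV's ResNet-10 SSD face detector sample.
    blob = cv2.dnn.blobFromImage(cv2.resize(frame, (300, 300)), 1.0, (300, 300),
                                 (104.0, 177.0, 123.0))
    net.setInput(blob)
    return parse_detections(net.forward(), width, height, min_confidence)
```

The network itself would be loaded once, for example with `cv2.dnn.readNetFromCaffe("deploy.prototxt", "res10_300x300_ssd_iter_140000.caffemodel")` after downloading those files. Each returned box can then be cropped from the frame with `frame[y1:y2, x1:x2]` before it is sent for identification.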

The scope

There will be 4 major pieces in our solution:

- Video streaming and processing on the device, including face detection.

  - Face detection is the biggest Computer Vision and Machine Learning exercise in this series. We’ll get to learn a few CV tricks and even train our own face detection model.

- Facial Identification service in Azure (yes, we won’t talk to Face API directly).

- Device deployment and management using Azure IoT Hub.

- Face enrollment app for adding people’s images to Face API and connecting them with identities in Active Directory.
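Behind the Facial Identification service, the call to Face API is an ordinary REST request. Here is a sketch of what such a service might send to the Face API v1.0 Identify operation. It assumes faces were first detected with the Detect operation, which returns the `faceId` values, and that people are enrolled in a person group. `build_identify_request`, the endpoint and the 0.7 default confidence threshold are illustrative assumptions. The request is only built here, not sent.

```python
import json
import urllib.request
from typing import List


def build_identify_request(endpoint: str, key: str, person_group_id: str,
                           face_ids: List[str], max_candidates: int = 1,
                           confidence_threshold: float = 0.7) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a Face API v1.0 Identify request."""
    if not 1 <= len(face_ids) <= 10:
        raise ValueError("Identify accepts between 1 and 10 face IDs per call")
    body = {
        "personGroupId": person_group_id,
        "faceIds": face_ids,  # IDs returned by an earlier Detect call
        "maxNumOfCandidatesReturned": max_candidates,
        "confidenceThreshold": confidence_threshold,  # assumed default, tune per deployment
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/face/v1.0/identify",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Ocp-Apim-Subscription-Key": key},
        method="POST",
    )
```

`urllib.request.urlopen(req)` would send it. The JSON response lists candidates (`personId`, `confidence`) for each face ID, and the service can then map each `personId` to an Active Directory identity.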

Looks like a long story, and it is! We are going to be agile and can adjust this list on the go as needed.

Getting started on the device

“How about some code?” you may ask 😃 Here we go!

We start with #1 from the list above:

- Capturing video using GStreamer

- Performing Face Detection (a big one!) using a couple of different methods

- Adding Face Tracking to avoid duplicating requests for the same face

- Training our own face detection model
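Face tracking is what keeps us from sending the same face for identification on every frame. Here is a naive sketch, assuming detections arrive as pixel boxes for each frame. A box that overlaps a known track (intersection over union at or above `min_iou`) keeps that track’s ID, and only newly created IDs would trigger an identification request. `NaiveFaceTracker` and its parameters are hypothetical. A real tracker would also need to handle occlusion and fast movement.

```python
from itertools import count
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class NaiveFaceTracker:
    """Greedy IoU matcher: each face gets an ID once and is flagged as new only on that frame."""

    def __init__(self, min_iou: float = 0.3, max_missed: int = 5):
        self.min_iou = min_iou        # overlap required to treat two boxes as the same face
        self.max_missed = max_missed  # frames a track may go unseen before it is forgotten
        self.tracks: Dict[int, Tuple[Box, int]] = {}  # track id -> (last box, missed frames)
        self._ids = count(1)

    def update(self, boxes: List[Box]) -> List[Tuple[int, Box, bool]]:
        """Match this frame's boxes to tracks; the bool marks faces not seen before."""
        results, matched = [], set()
        for box in boxes:
            best_id, best_iou = None, self.min_iou
            for track_id, (prev, _) in self.tracks.items():
                score = iou(box, prev)
                if track_id not in matched and score >= best_iou:
                    best_id, best_iou = track_id, score
            is_new = best_id is None
            if is_new:
                best_id = next(self._ids)
            self.tracks[best_id] = (box, 0)
            matched.add(best_id)
            results.append((best_id, box, is_new))
        # Age unmatched tracks and drop the ones that have been gone too long.
        for track_id in list(self.tracks):
            if track_id not in matched:
                box, missed = self.tracks[track_id]
                if missed + 1 > self.max_missed:
                    del self.tracks[track_id]
                else:
                    self.tracks[track_id] = (box, missed + 1)
        return results
```

In a capture loop, `for track_id, box, is_new in tracker.update(boxes):` would send only the entries with `is_new` set for identification, while the rest reuse the result already obtained for that track.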

First stop - Part 1: Capturing video using GStreamer

Page header photo is ‘Faces’ by CasparGirl (CC-BY 2.0)